Six Dimensions of Operational Adequacy in AGI Projects — LessWrong

By a mysterious writer

Description

Editor's note: The following is a lightly edited copy of a document written by Eliezer Yudkowsky in November 2017. Since this is a snapshot of Eliez…


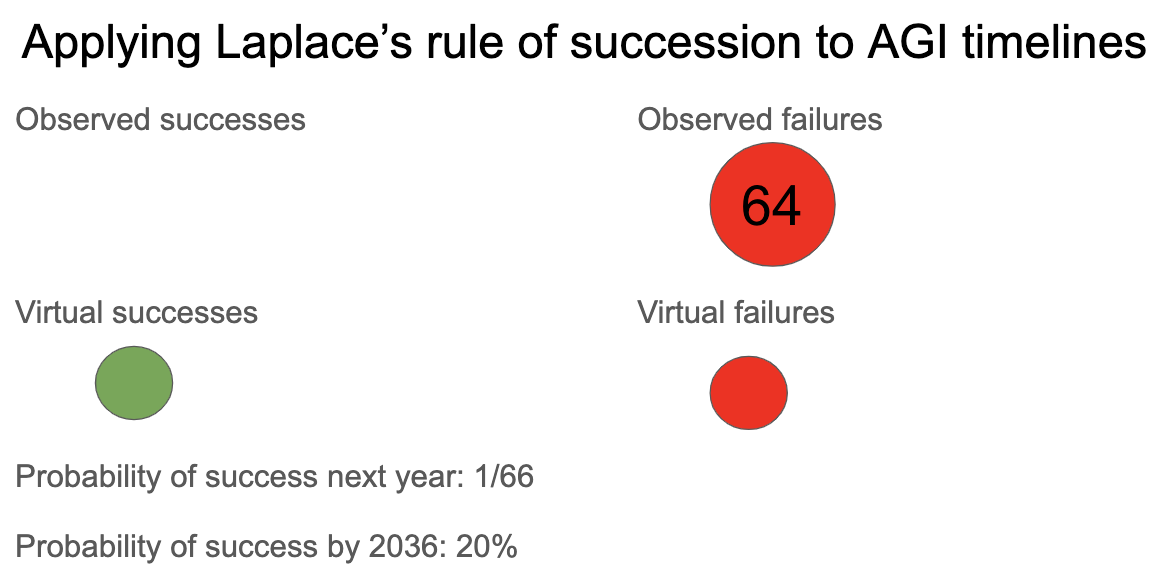

Semi-informative priors over AI timelines

25 of Eliezer Yudkowsky Podcasts Interviews

Challenges to Christiano's capability amplification proposal

OpenAI, DeepMind, Anthropic, etc. should shut down. — EA Forum

AI Safety is Dropping the Ball on Clown Attacks — LessWrong

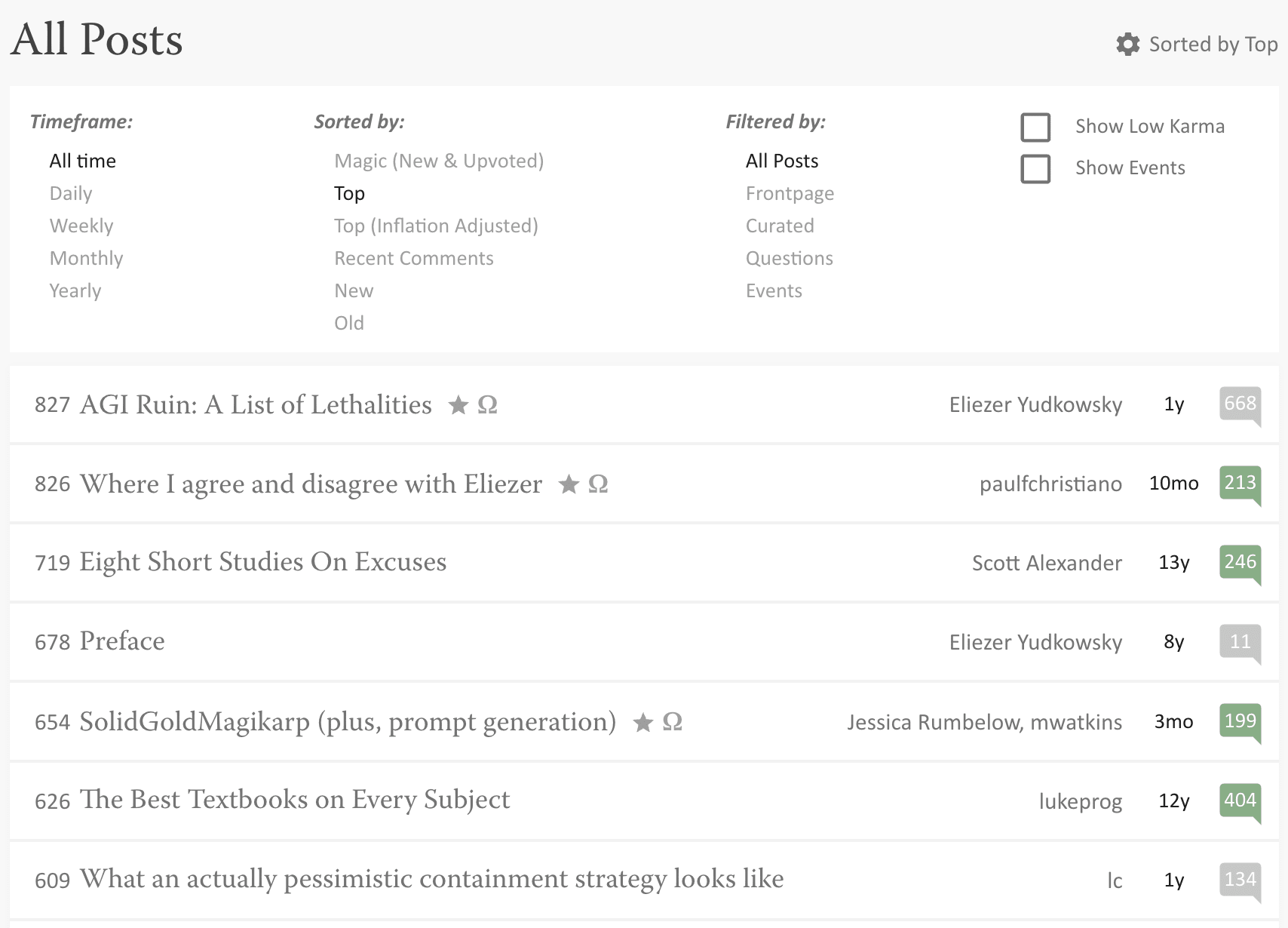

AGI Ruin: A List of Lethalities — AI Alignment Forum

The Nonlinear Library

(PDF) CONVERGENCE IN MISSISSIPPI: A SPATIAL APPROACH.

Embedded World-Models - Machine Intelligence Research Institute

[Linkpost] Introducing Superalignment — LessWrong

AGI rising: why we are in a new era of acute risk and increasing

Everything I Need To Know About Takeoff Speeds I Learned From Air


AGI Ruin: A List of Lethalities - LessWrong 2.0 viewer
