Can You Close the Performance Gap Between GPU and CPU for Deep Learning Models? - Deci

By a mysterious writer

Description

How can CPU inference be optimized for deep learning models? This post discusses model efficiency and the performance gap between GPU and CPU inference. Read on.

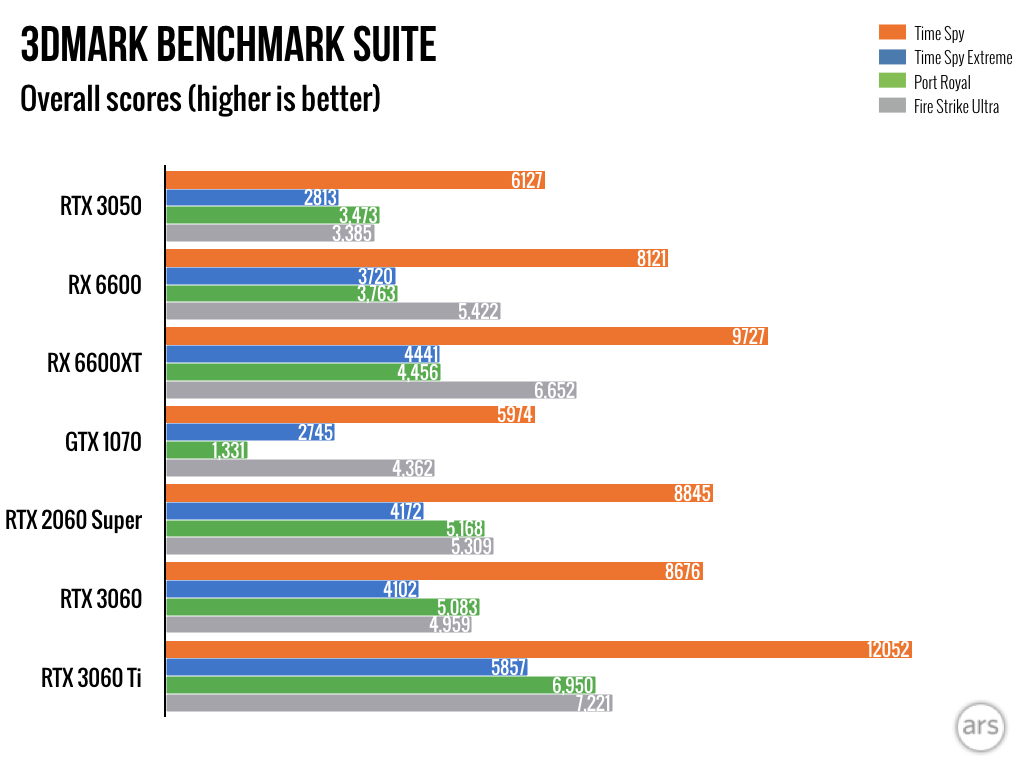

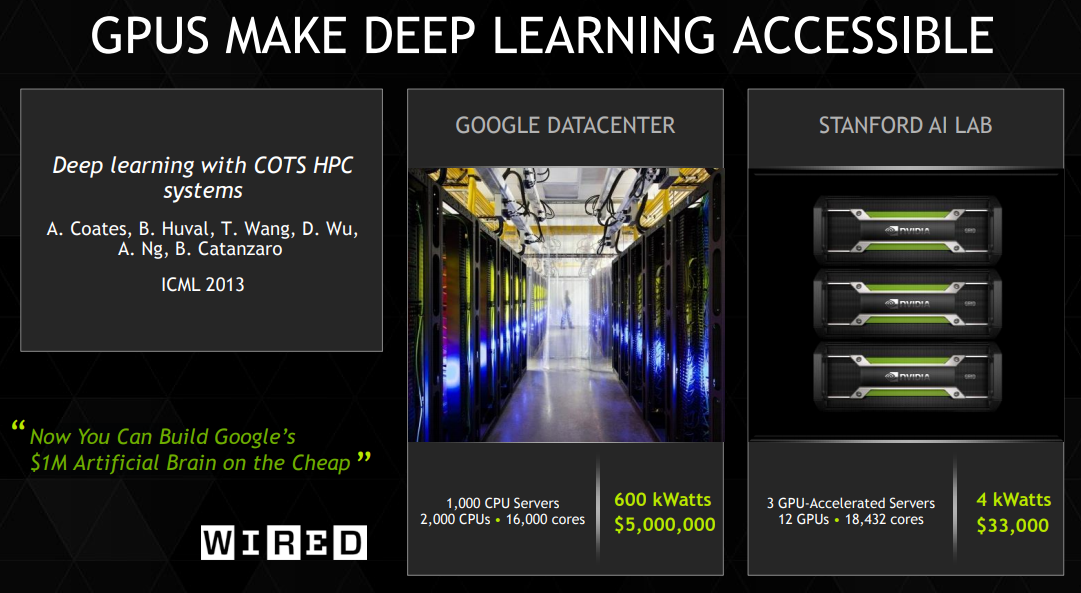

Hardware for Deep Learning. Part 3: GPU, by Grigory Sapunov

Israeli startup Deci turns to Insight Partners to help produce AI models on steroids

Deci's New Family of Models Delivers Breakthrough Deep Learning Performance on CPU

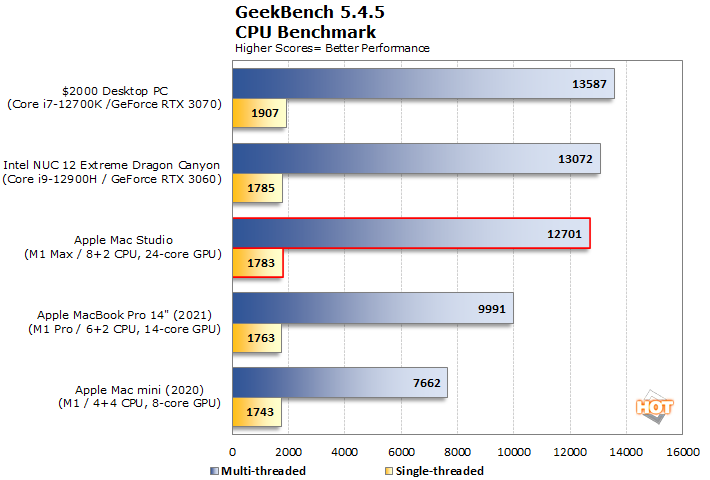

(PDF) Comparative Analysis of CPU and GPU Profiling for Deep Learning Models

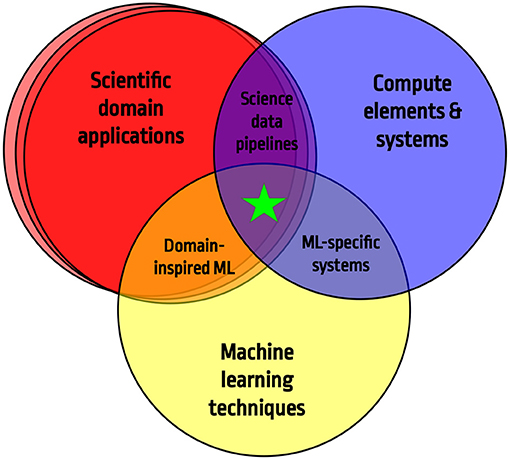

Frontiers: Applications and Techniques for Fast Machine Learning in Science

NVIDIA TensorRT-LLM Supercharges Large Language Model Inference on NVIDIA H100 GPUs

Measuring Neural Network Performance: Latency and Throughput on GPU, by YOUNESS-ELBRAG

Does a CPU/GPU's performance affect a machine learning model's accuracy? - Quora

A Full Hardware Guide to Deep Learning — Tim Dettmers

Vector Processing on CPUs and GPUs Compared, by Erik Engheim

Nvidia RTX 4080 12GB Is Up to 30% Slower Than 16GB Model, Benchmarks Show

DeciNets AI models arrive with Intel CPU optimization
