No Virtualization Tax for MLPerf Inference v3.0 Using NVIDIA Hopper and Ampere vGPUs and NVIDIA AI Software with vSphere 8.0.1 - VROOM! Performance Blog

By a mysterious writer

Description

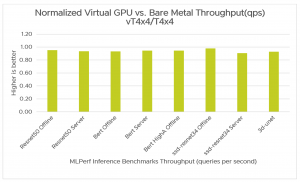

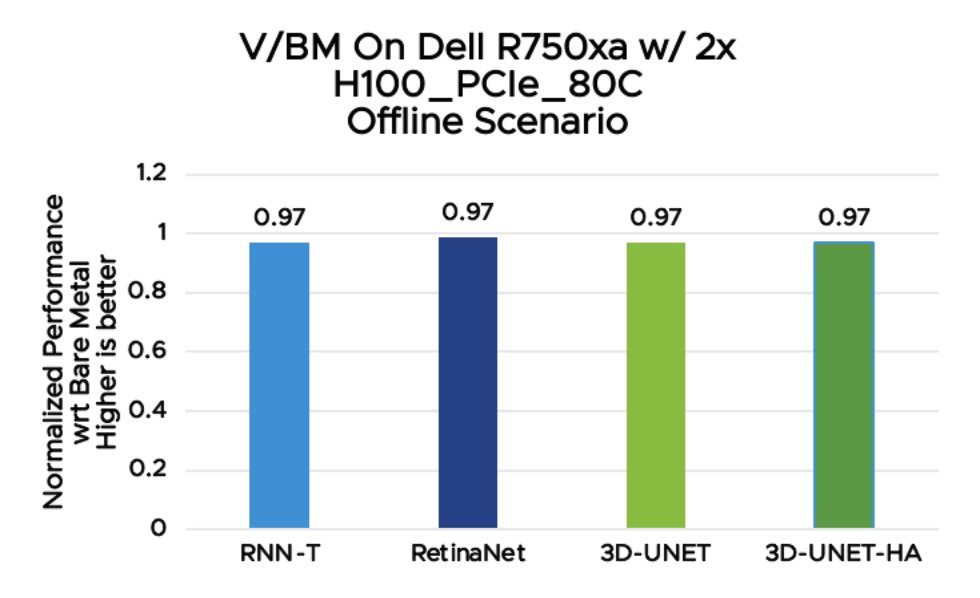

In this blog post, we present MLPerf Inference v3.0 test results for the VMware vSphere virtualization platform with NVIDIA H100- and A100-based vGPUs. Our tests show that workloads running on NVIDIA vGPUs in vSphere perform as well as, or better than, the same workloads running on a bare-metal system.

NVIDIA Posts Big AI Numbers In MLPerf Inference v3.1 Benchmarks With Hopper H100, GH200 Superchips & L4 GPUs

Getting the Best Performance on MLPerf Inference 2.0

Winning MLPerf Inference 0.7 with a Full-Stack Approach

VMware and NVIDIA solutions deliver high performance in machine learning workloads - VROOM! Performance Blog

virtualization Archives - VROOM! Performance Blog

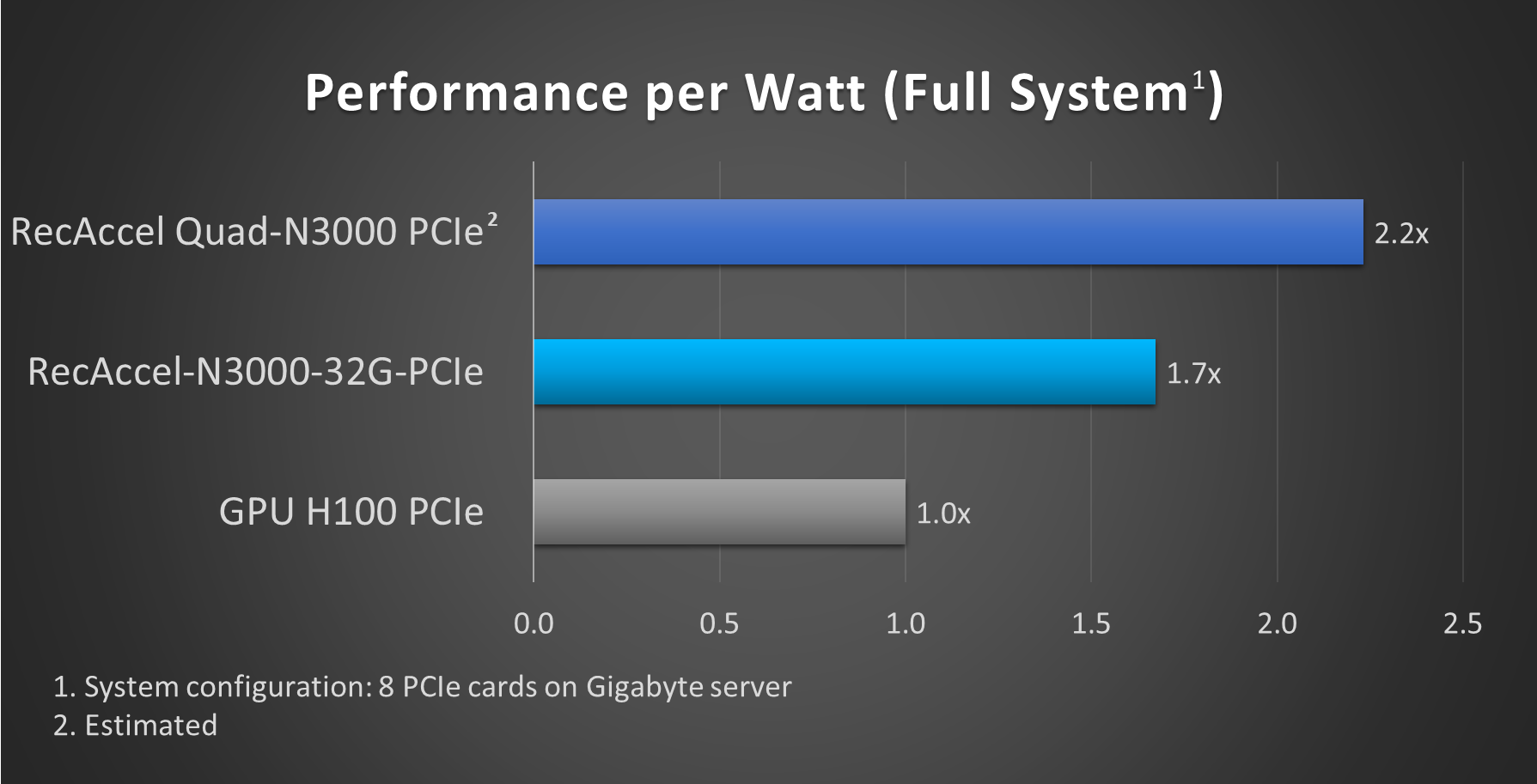

NEUCHIPS RecAccel N3000 Delivers Industry Leading Results for MLPerf v3.0 DLRM Inference Benchmarking

Nvidia Shows Off Grace Hopper in MLPerf Inference - EE Times

Leading MLPerf Inference v3.1 Results with NVIDIA GH200 Grace Hopper Superchip Debut

MLPerf Inference Virtualization in VMware vSphere Using NVIDIA vGPUs - VROOM! Performance Blog

Uday Kurkure – VROOM! Performance Blog

VMware Performance (@vmwarevroom) / X
