Visualizing the gradient descent method

By a mysterious writer

Description

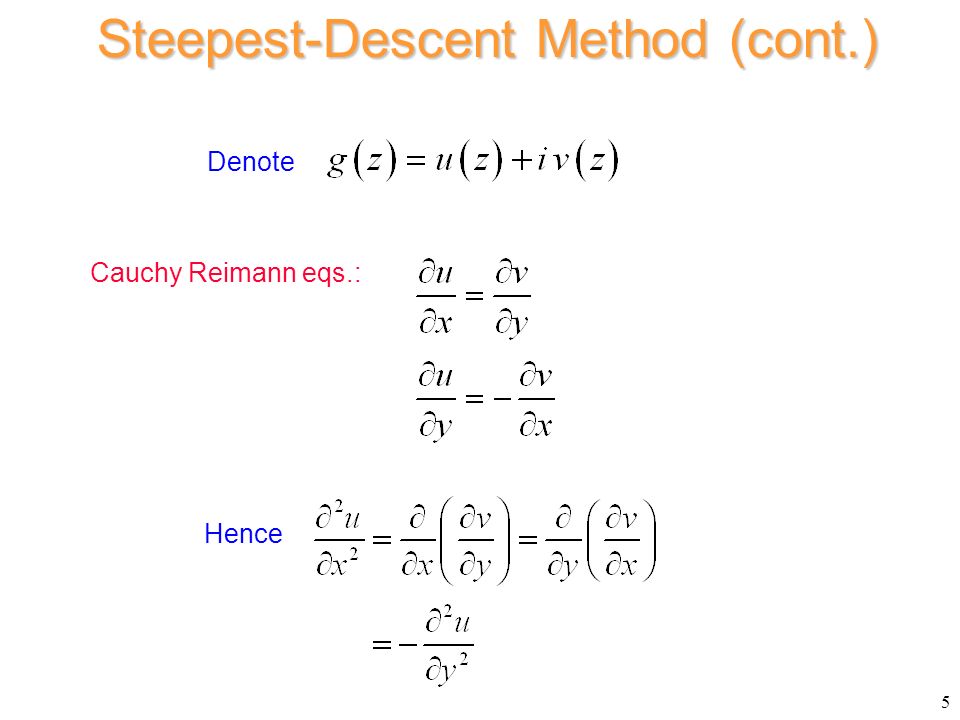

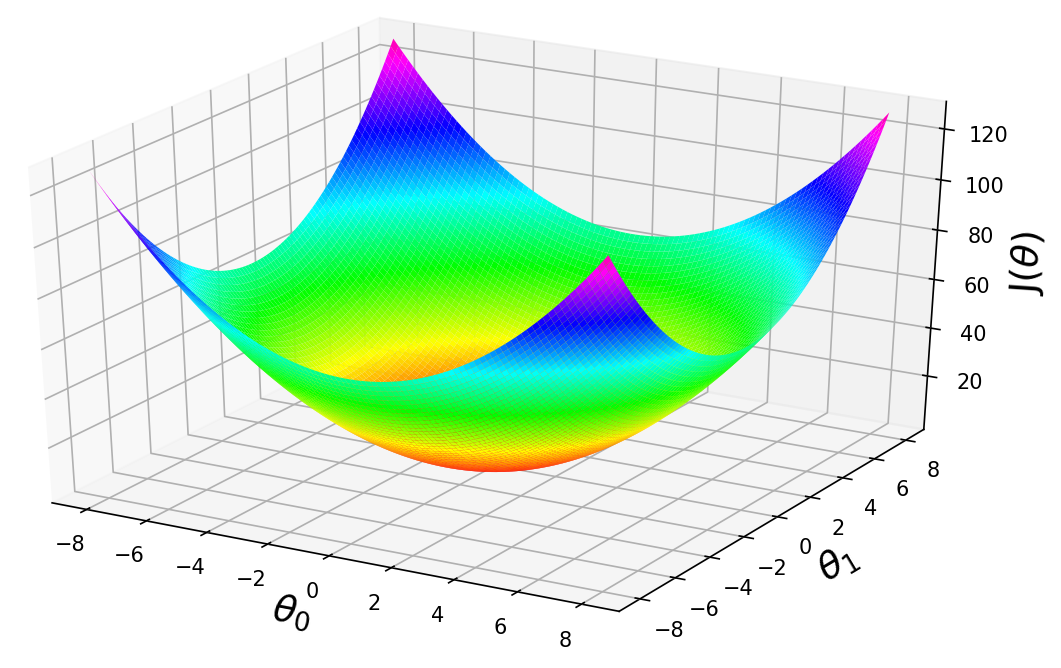

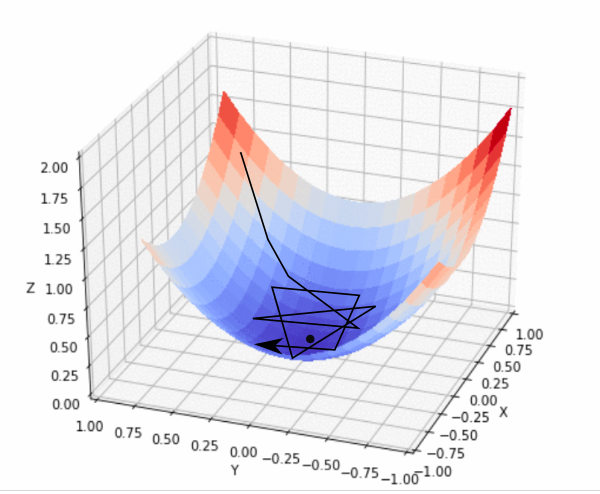

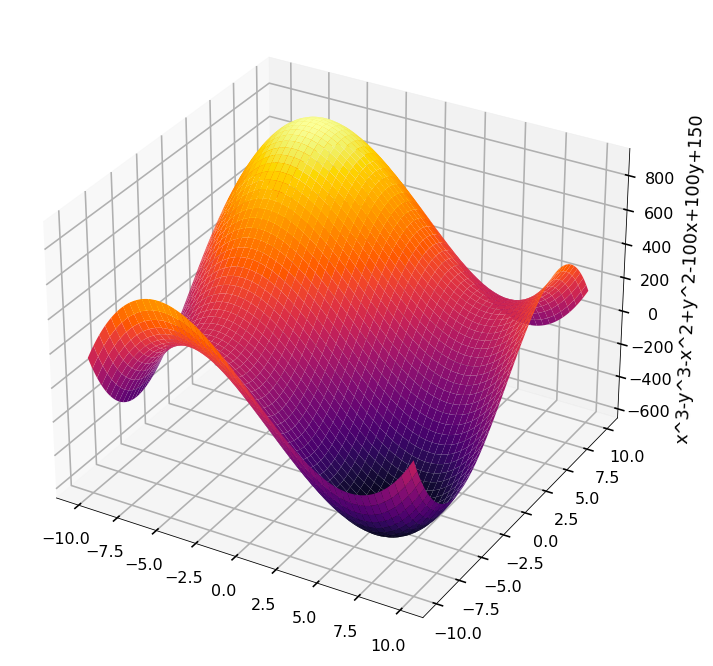

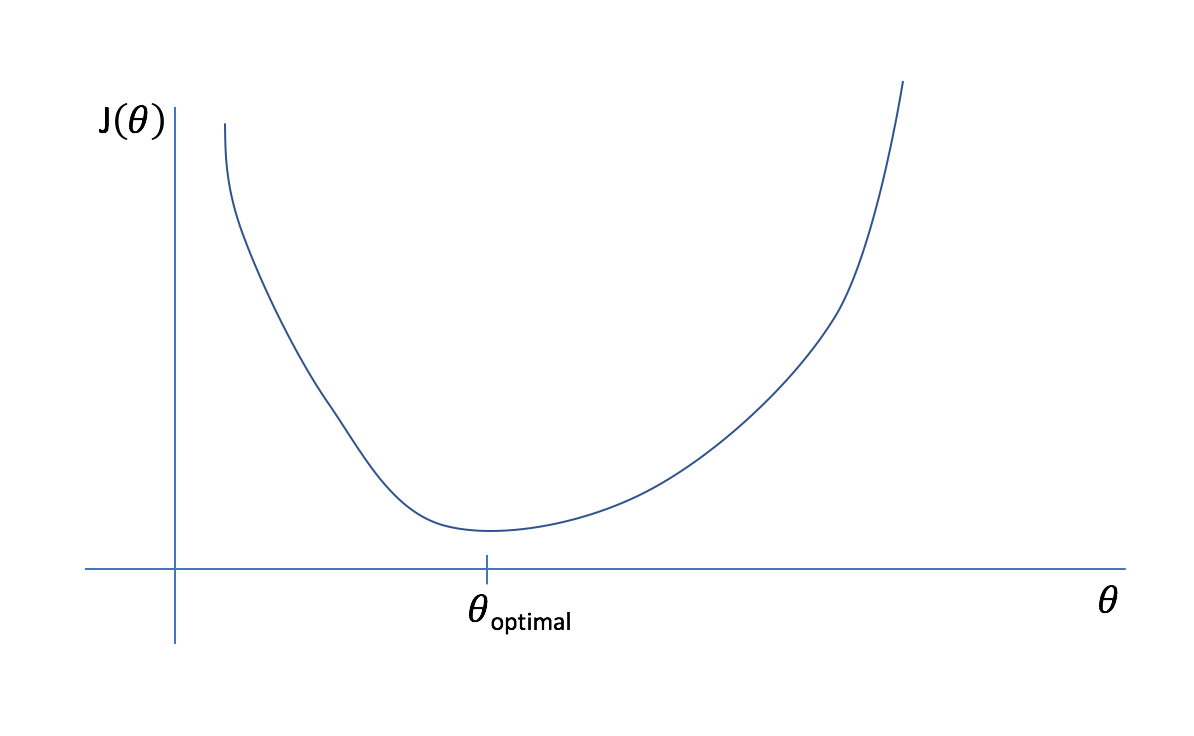

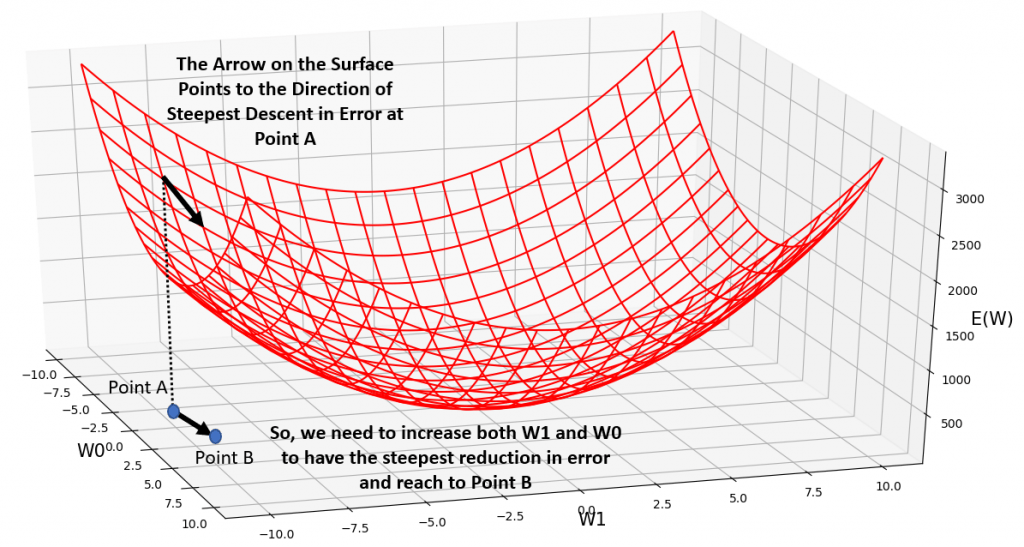

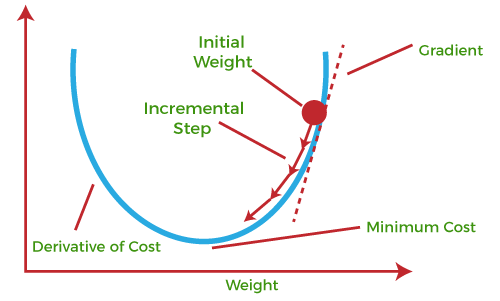

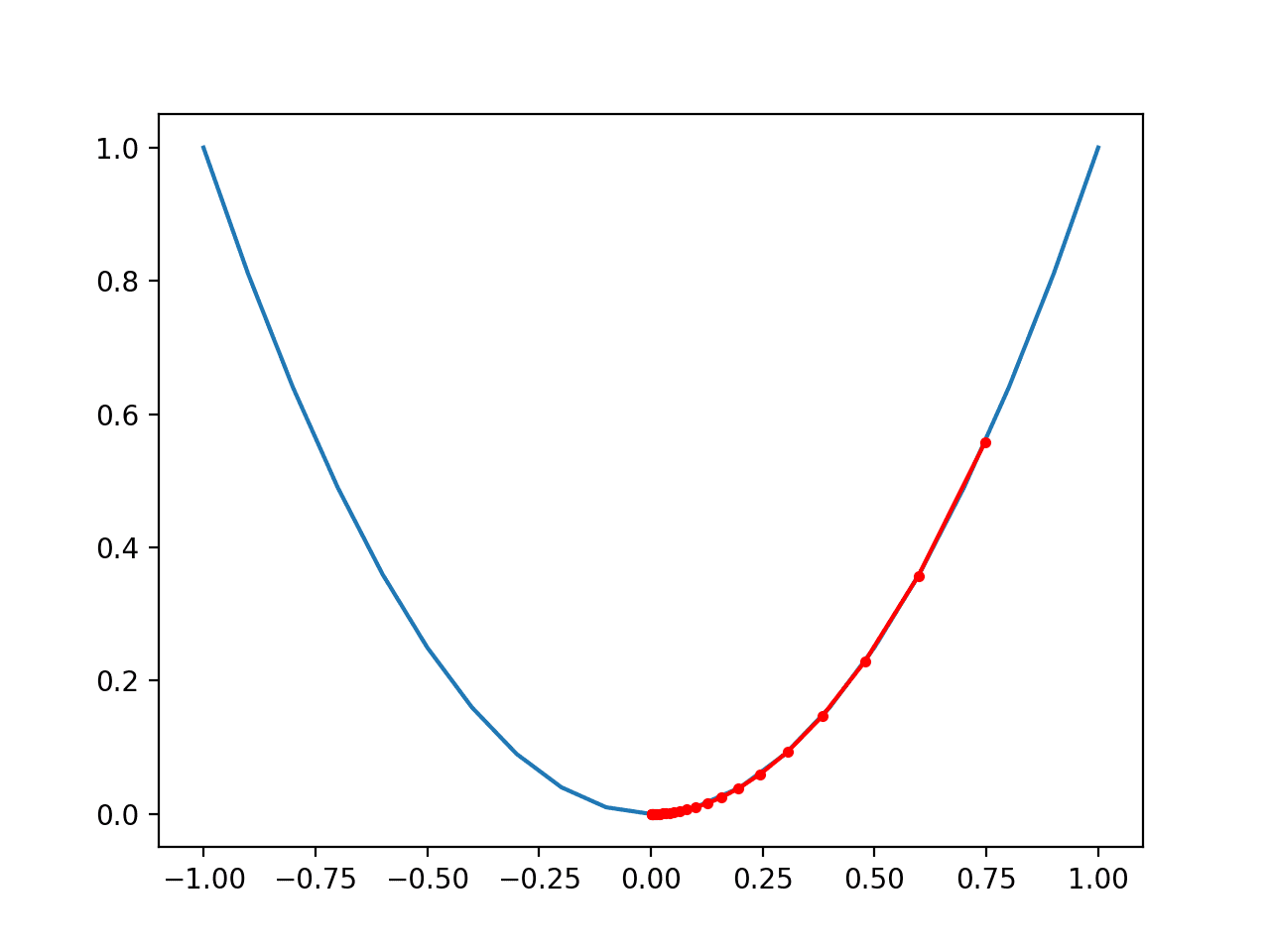

In the gradient descent method of optimization, a hypothesis function, $h_{\boldsymbol{\theta}}(x)$, is fitted to a data set, $(x^{(i)}, y^{(i)})$ ($i=1,2,\cdots,m$), by minimizing an associated cost function, $J(\boldsymbol{\theta})$, with respect to the parameters $\boldsymbol{\theta} = (\theta_0, \theta_1, \cdots)$. The cost function measures how closely the hypothesis fits the data for a given choice of $\boldsymbol{\theta}$: the smaller its value, the better the fit.
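To make the fitting procedure concrete, here is a minimal sketch in plain Python. The text above does not fix the form of the hypothesis or the cost, so the sketch assumes a straight-line hypothesis $h_{\boldsymbol{\theta}}(x) = \theta_0 + \theta_1 x$ and a mean-squared-error cost $J(\boldsymbol{\theta}) = \frac{1}{2m}\sum_{i=1}^{m}\big(h_{\boldsymbol{\theta}}(x^{(i)}) - y^{(i)}\big)^2$. The function names, the learning rate `alpha`, the step count `n_steps`, and the sample data are all hypothetical choices made for illustration.

```python
def hypothesis(theta, x):
    """Assumed straight-line hypothesis: h_theta(x) = theta0 + theta1 * x."""
    theta0, theta1 = theta
    return theta0 + theta1 * x


def cost(theta, xs, ys):
    """Assumed cost: J(theta) = 1/(2m) * sum of squared residuals."""
    m = len(xs)
    return sum((hypothesis(theta, x) - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha=0.1, n_steps=2000):
    """Batch gradient descent starting from theta = (0, 0).

    Returns the final parameters and the cost recorded at every step.
    alpha (learning rate) and n_steps are illustrative defaults.
    """
    m = len(xs)
    theta = (0.0, 0.0)
    history = [cost(theta, xs, ys)]
    for _ in range(n_steps):
        # Residuals h_theta(x_i) - y_i for the current parameters
        errors = [hypothesis(theta, x) - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to theta0 and theta1
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Update both parameters simultaneously, stepping against the gradient
        theta = (theta[0] - alpha * grad0, theta[1] - alpha * grad1)
        history.append(cost(theta, xs, ys))
    return theta, history


# Hypothetical noise-free data lying exactly on the line y = 1 + 2x
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0 + 2.0 * x for x in xs]

theta, history = gradient_descent(xs, ys)
print("fitted theta:", theta)
print("initial cost:", history[0], "final cost:", history[-1])
```

The recorded `history` is the quantity one would typically plot against the step number, and the sequence of `theta` values is what traces out a path on a contour or surface plot of $J(\boldsymbol{\theta})$. For this data a learning rate much larger than the one chosen here would make the iteration diverge, so `alpha` has to be tuned to the problem.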

What is meant by gradient descent in laymen terms? - Quora

Guide to Gradient Descent Algorithm: A Comprehensive implementation in Python - Machine Learning Space

Intro to optimization in deep learning: Gradient Descent

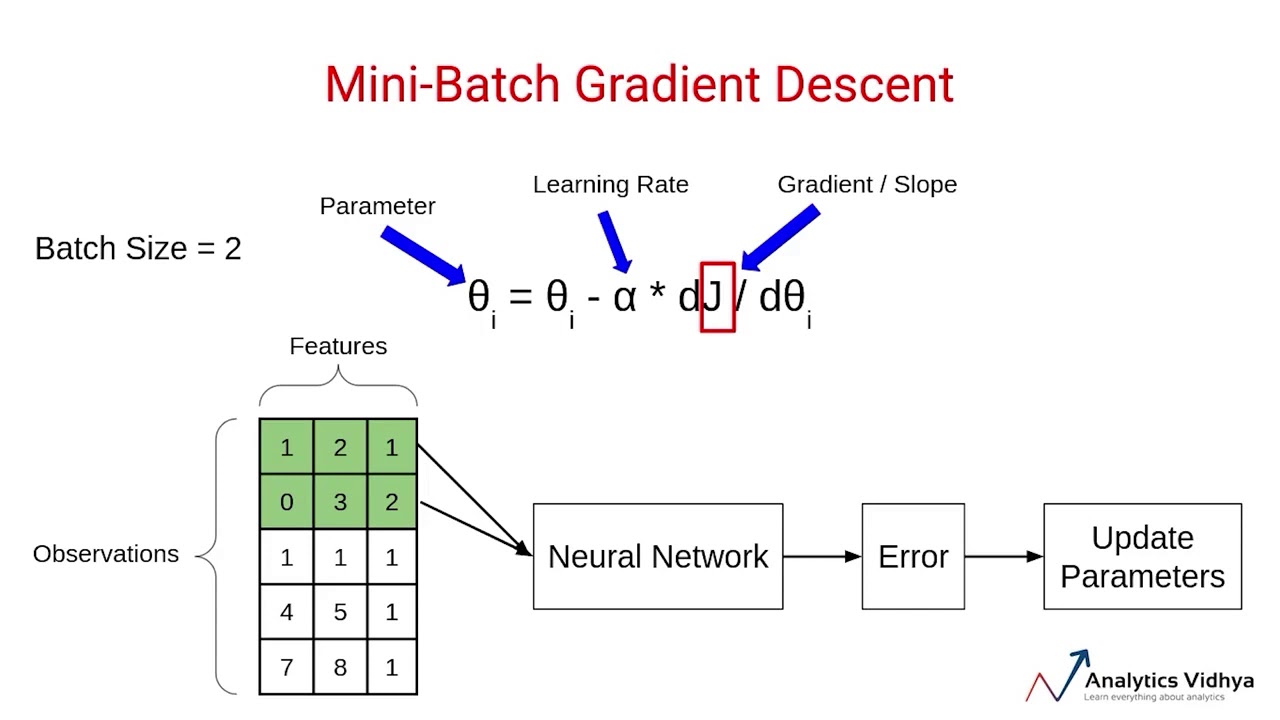

Variants of Gradient Descent Algorithm

How to visualize Gradient Descent using Contour plot in Python

Visualizing Gradient Descent in 3D - Part 1 2022 - fast.ai Course Forums

Gradient Descent Visualization - Martin Kondor

Gradient Descent in Machine Learning, by Francesco Franco

Gradient descent.

Deriving the Gradient Descent Rule (PART-1)

Gradient Descent in Machine Learning - Javatpoint

Gradient-Based Optimizers in Deep Learning - Analytics Vidhya

Vanishing and Exploding Gradients in Neural Network Models: Debugging, Monitoring, and Fixing

Gradient Descent With Momentum from Scratch
