optimization - How to show that the method of steepest descent does not converge in a finite number of steps? - Mathematics Stack Exchange

By a mysterious writer

Description

I have a function,

$$f(\mathbf{x})=x_1^2+4x_2^2-4x_1-8x_2,$$

which can also be expressed as

$$f(\mathbf{x})=(x_1-2)^2+4(x_2-1)^2-8.$$
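One way to see why, sketched under an assumption: "steepest descent" here means the exact-line-search variant, which the question text does not state.

The completed-square form shows the minimizer is $(2,1)$, so write the error as $\mathbf{e}_k = \mathbf{x}_k - (2,1) = (u_k, v_k)$. Then $f = u^2 + 4v^2 - 8$, and the Hessian is the constant matrix $Q = \operatorname{diag}(2, 8)$. With $\mathbf{g}_k = \nabla f(\mathbf{x}_k)$:

$$\begin{aligned}
\mathbf{g}_k &= \begin{pmatrix} 2x_1 - 4 \\ 8x_2 - 8 \end{pmatrix} = Q\mathbf{e}_k = \begin{pmatrix} 2u_k \\ 8v_k \end{pmatrix}, \\
\alpha_k &= \frac{\mathbf{g}_k^\top \mathbf{g}_k}{\mathbf{g}_k^\top Q\, \mathbf{g}_k} = \frac{4u_k^2 + 64v_k^2}{8u_k^2 + 512v_k^2}, \\
\mathbf{e}_{k+1} &= \mathbf{e}_k - \alpha_k \mathbf{g}_k = \begin{pmatrix} (1 - 2\alpha_k)\,u_k \\ (1 - 8\alpha_k)\,v_k \end{pmatrix}.
\end{aligned}$$

The step size is a weighted average of $\tfrac12$ and $\tfrac18$:

$$\alpha_k = \frac{\tfrac12\,(8u_k^2) + \tfrac18\,(512v_k^2)}{8u_k^2 + 512v_k^2}.$$

So if $u_k \neq 0$ and $v_k \neq 0$, then $\tfrac18 < \alpha_k < \tfrac12$, and both components of $\mathbf{e}_{k+1}$ are again nonzero. By induction, any start with $x_1 \neq 2$ and $x_2 \neq 1$ gives $\mathbf{x}_k \neq (2,1)$ for every finite $k$.

Conversely, consider a start on the line $x_1 = 2$ or $x_2 = 1$, other than the minimizer itself. Then $\mathbf{e}_0$ is an eigenvector of $Q$, and a single step lands exactly on $(2,1)$.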

I've deduced the minimizer $\mathbf{x}^*$ as $(2,1)$ with $f^* = -8$.
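The sketch below is a numerical sanity check, not a proof. It runs steepest descent with exact line search (an assumption, as above) in exact rational arithmetic via Python's standard `fractions.Fraction`, so floating-point rounding can neither hide nor fake convergence. The starting points, step counts and helper names (`grad`, `steepest_descent`, `X_STAR`) are illustrative choices, not part of the question.

```python
from fractions import Fraction

# Minimizer of f, read off from f(x) = (x1 - 2)^2 + 4(x2 - 1)^2 - 8.
X_STAR = (Fraction(2), Fraction(1))


def f(x):
    """f(x) = x1^2 + 4 x2^2 - 4 x1 - 8 x2."""
    x1, x2 = x
    return x1**2 + 4 * x2**2 - 4 * x1 - 8 * x2


def grad(x):
    """Gradient of f: (2 x1 - 4, 8 x2 - 8)."""
    x1, x2 = x
    return (2 * x1 - 4, 8 * x2 - 8)


def steepest_descent(x0, steps):
    """Steepest descent with exact line search, in exact rational arithmetic.

    Returns the list of iterates [x0, x1, ...]; stops early if the gradient
    vanishes, i.e. the minimizer has been reached exactly.
    """
    x = (Fraction(x0[0]), Fraction(x0[1]))
    iterates = [x]
    for _ in range(steps):
        g1, g2 = grad(x)
        gg = g1 * g1 + g2 * g2  # g^T g
        if gg == 0:
            break
        gQg = 2 * g1 * g1 + 8 * g2 * g2  # g^T Q g with Q = diag(2, 8)
        alpha = gg / gQg  # exact minimizer of f(x - alpha * g) for this quadratic
        x = (x[0] - alpha * g1, x[1] - alpha * g2)
        iterates.append(x)
    return iterates


if __name__ == "__main__":
    its = steepest_descent((0, 0), 20)
    print(its[1])                         # (Fraction(10, 17), Fraction(20, 17))
    print(any(x == X_STAR for x in its))  # False
    print(steepest_descent((0, 1), 5))    # reaches (2, 1) after one step
```

The arithmetic is exact, so the fact that no iterate from $(0,0)$ equals $(2,1)$ is not a rounding artifact. With floating point, the iterates may eventually round to the minimizer. For that reason, a finite-precision run alone cannot settle the question.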

Mathematics, Free Full-Text

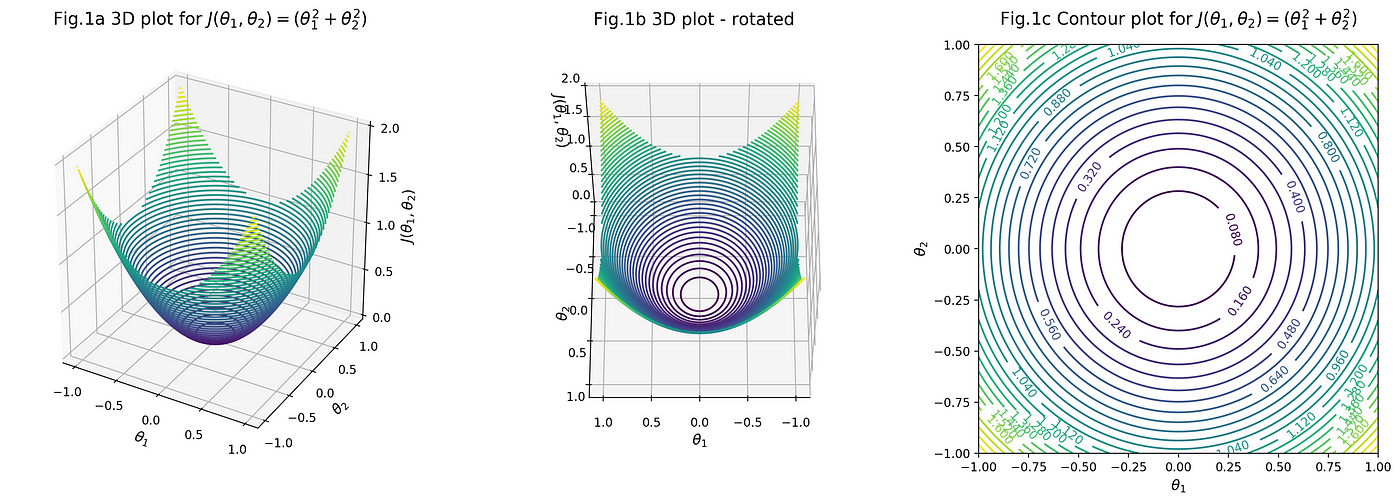

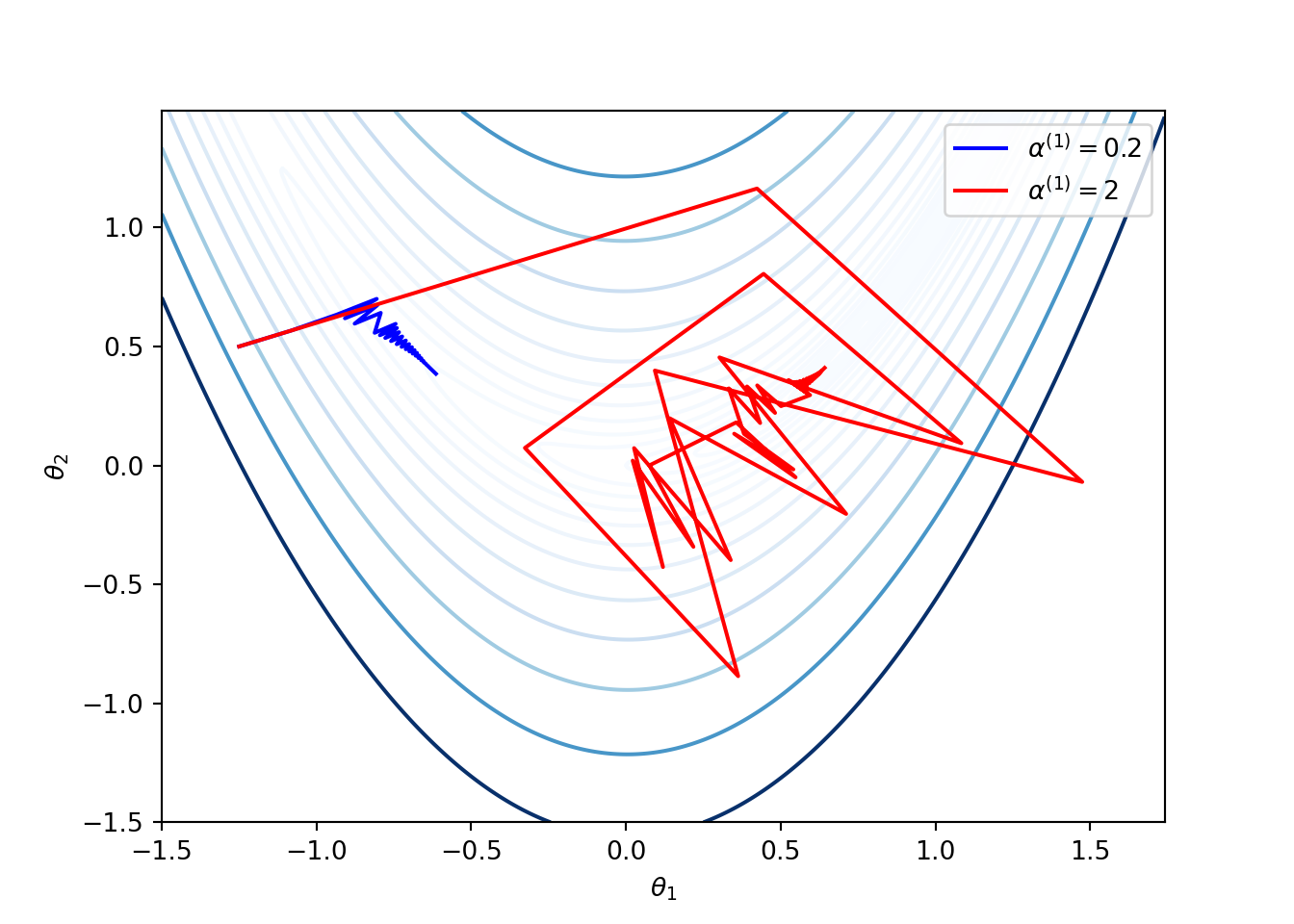

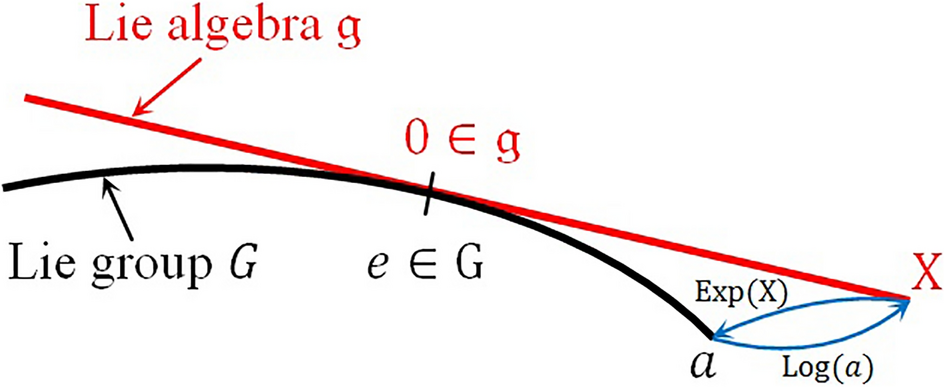

Intuition (and maths!) behind multivariate gradient descent, by Misa Ogura

3 Optimization Algorithms | The Mathematical Engineering of Deep Learning (2021)

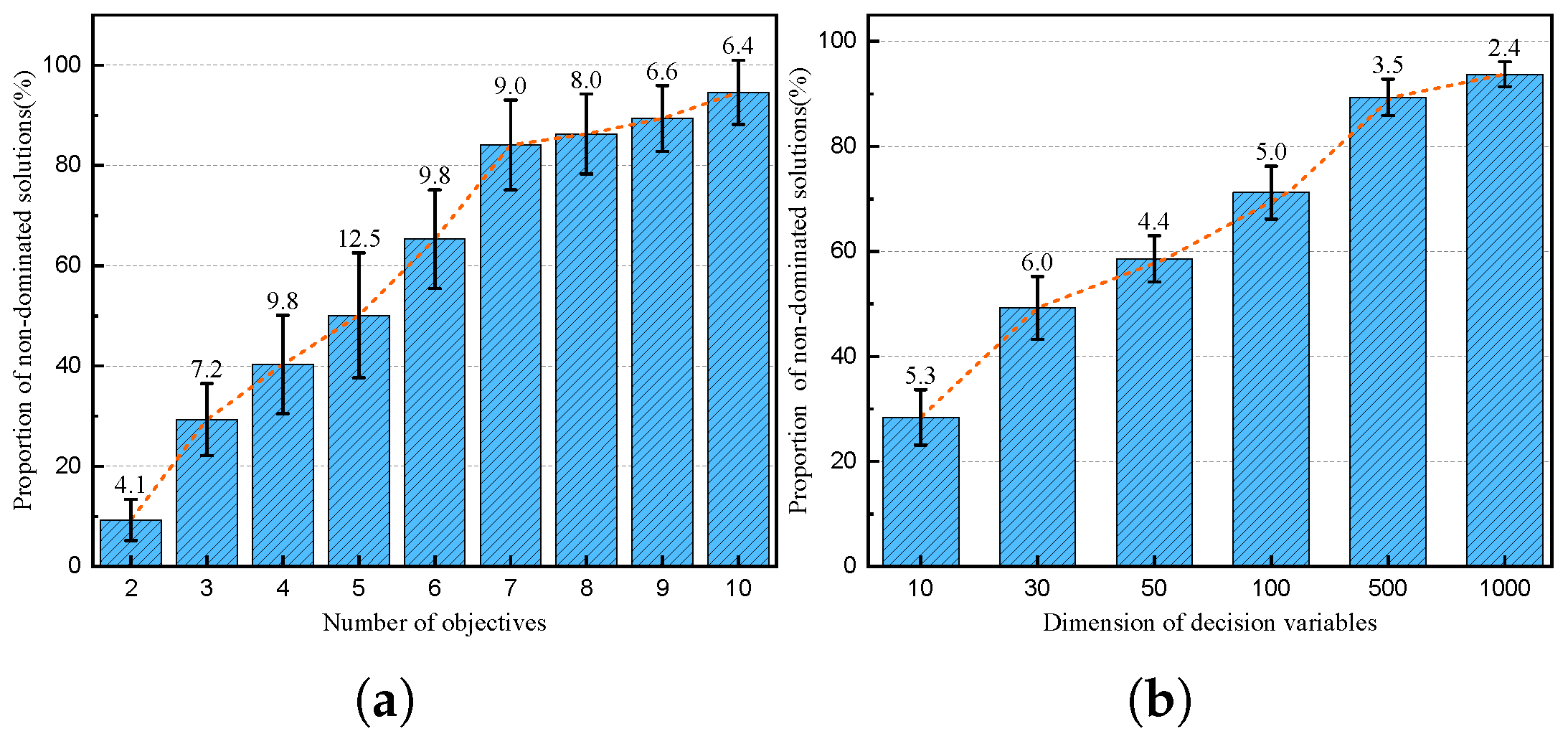

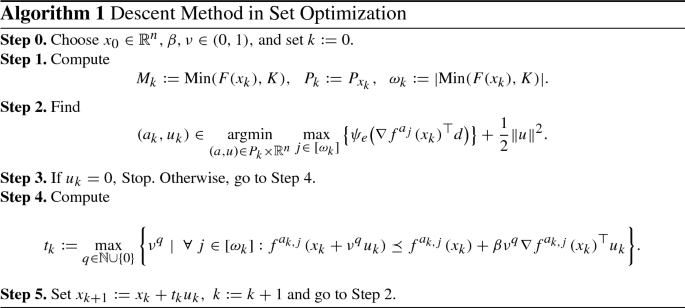

A Steepest Descent Method for Set Optimization Problems with Set-Valued Mappings of Finite Cardinality

Steepest Descent Method - an overview

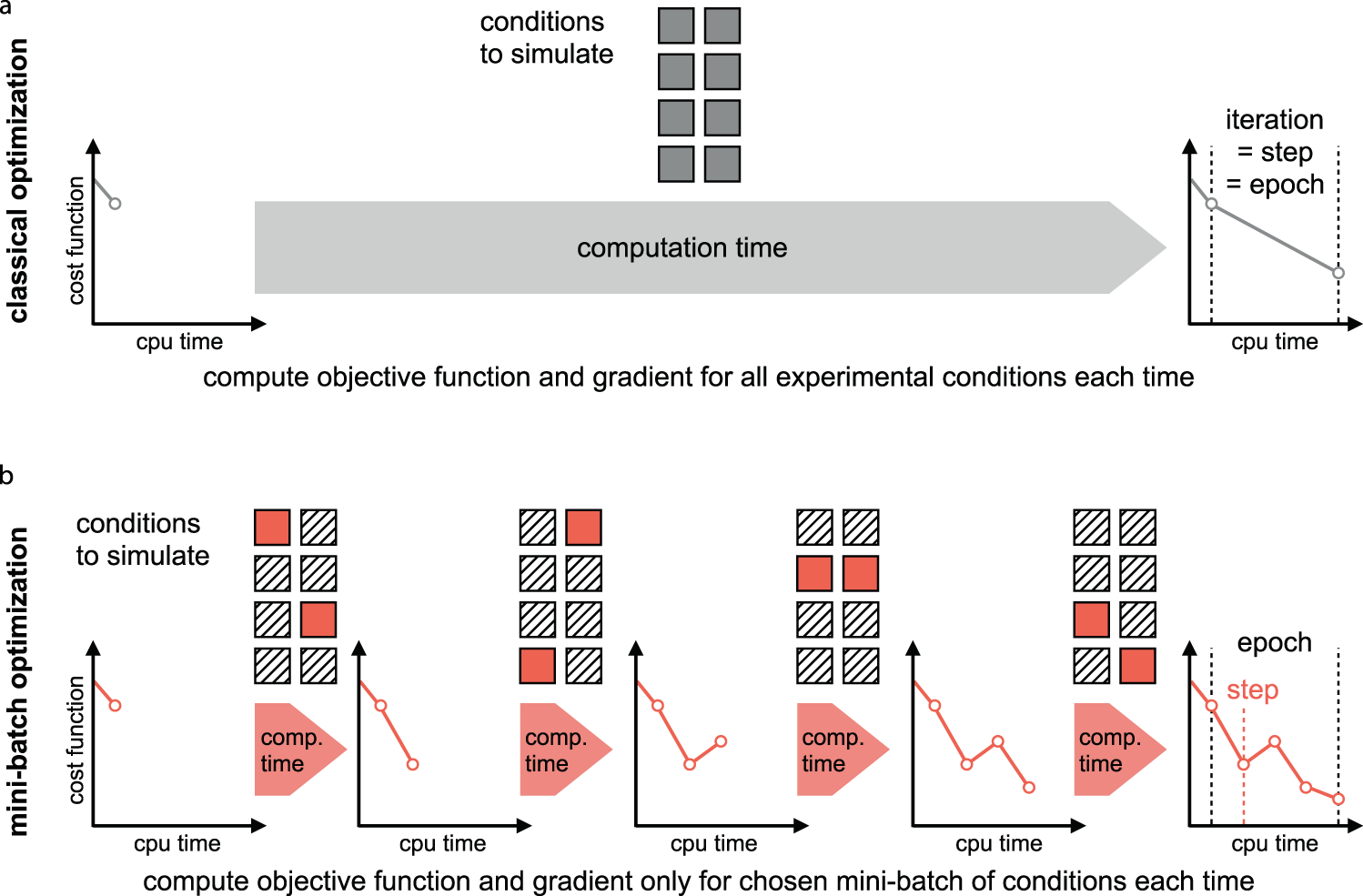

Mini-batch optimization enables training of ODE models on large-scale datasets

ordinary differential equations - Finite-time criterion for ODE - Mathematics Stack Exchange

convergence divergence - Interpretation of Noise in Function Optimization - Mathematics Stack Exchange

Mathematics, Free Full-Text

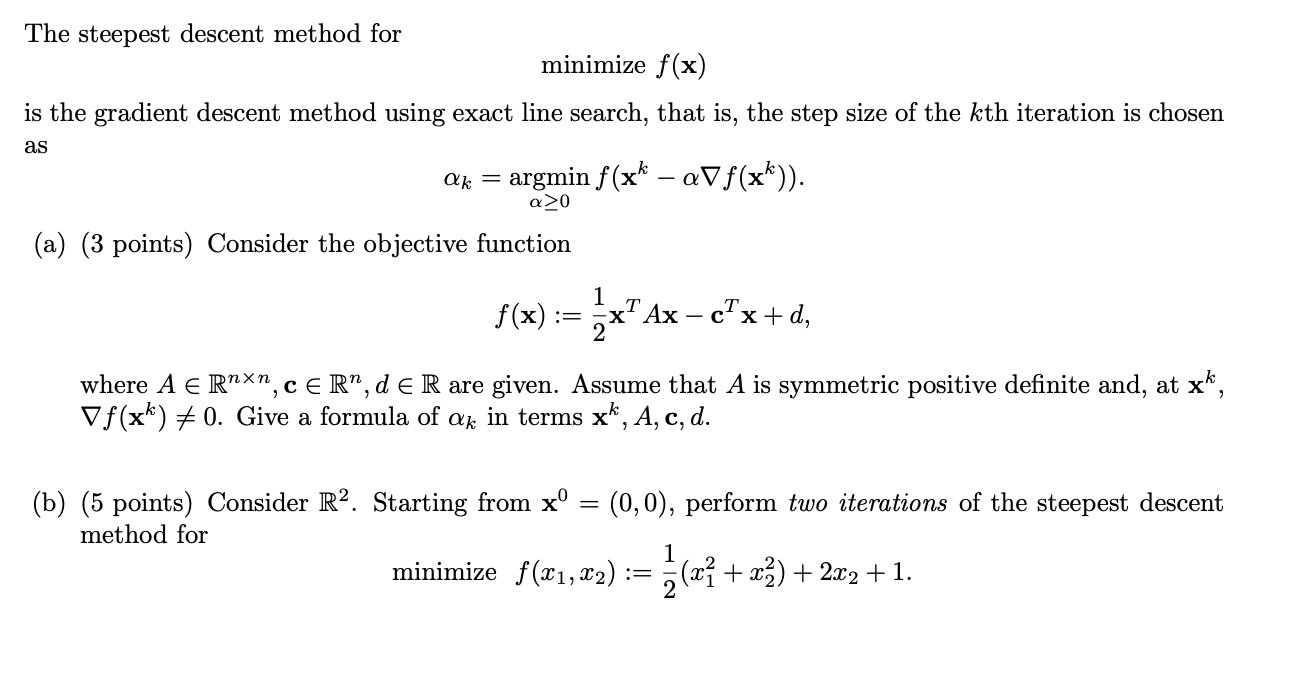

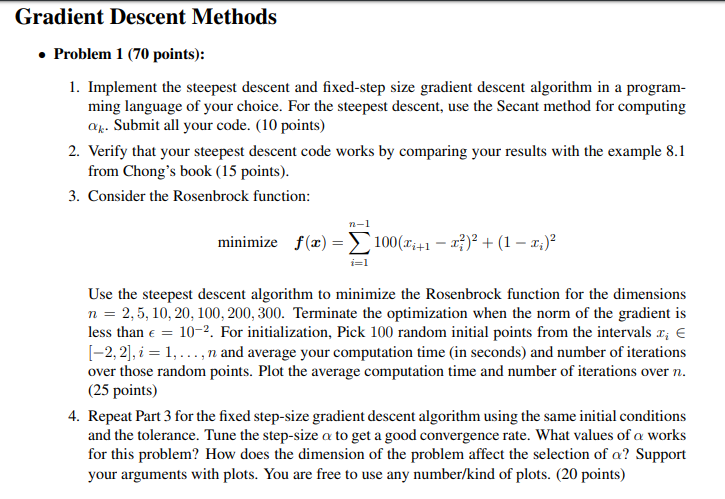

Gradient Descent Methods. Problem 1 (70 points): 1.

Fast gradient algorithm for complex ICA and its application to the MIMO systems

matrix - Gradient Descent: thetas not converging - Stack Overflow

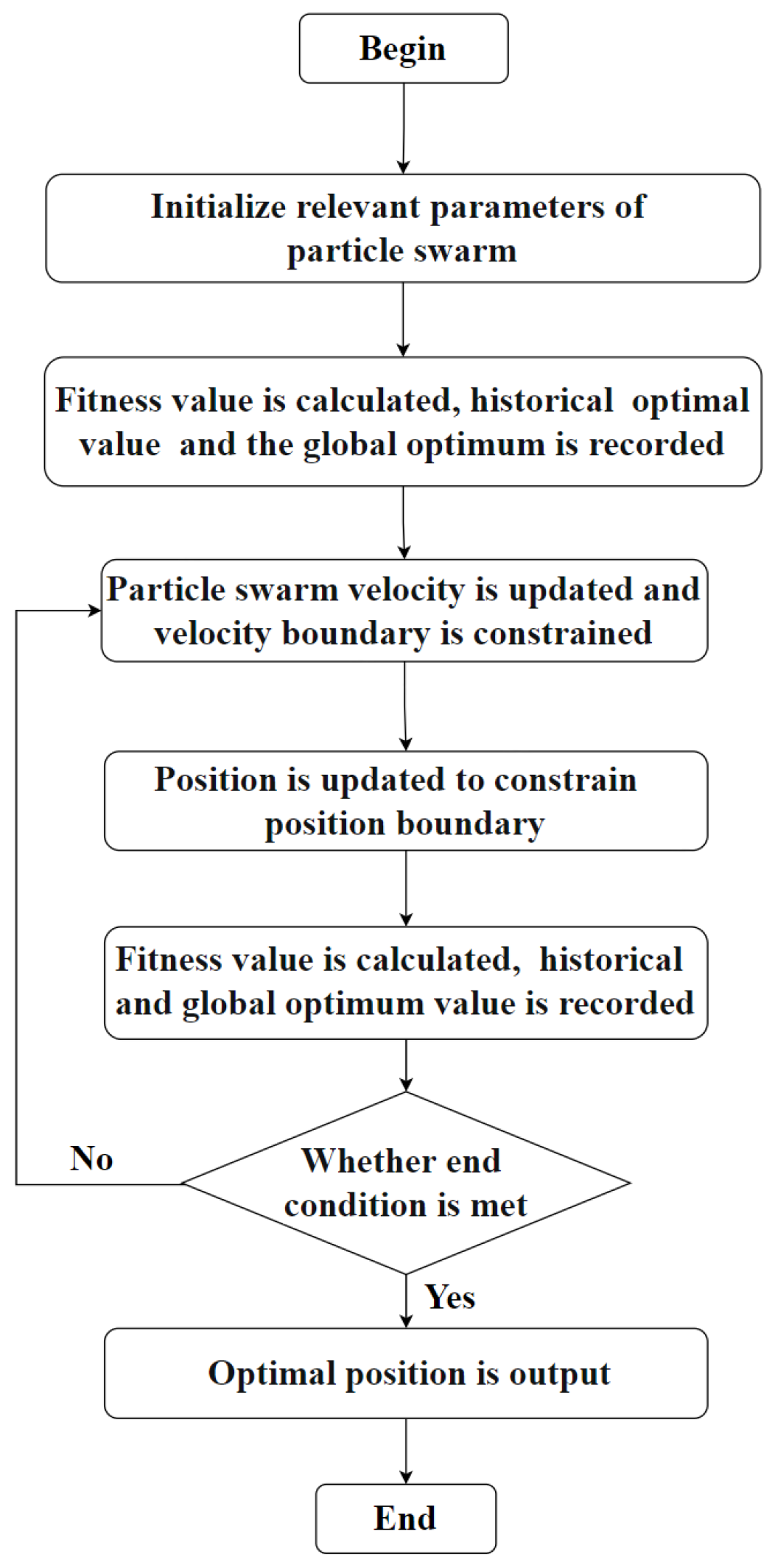

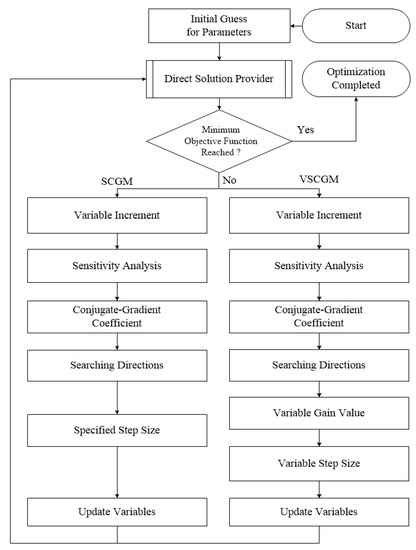

Energies, Free Full-Text
